Ranking Arab Universities: A Farce

American University of Beirut (Photo Credit: Wikipedia)

By Rigas Arvanitis and Sari Hanafi

In November 2014, US News & World Report, building on its earlier rankings, published the “Best Arab Region Universities.” According to this ranking, the five “best” universities in the Arab region are: King Saud University (Riyadh, Saudi Arabia [SA]), King Abdulaziz University (Jeddah, SA), King Abdullah University of Science & Technology (Thuwal, SA), Cairo University, and the American University of Beirut. Beyond this overall ranking, the publication offers rankings in each scientific field, an approximation of academic disciplines. While the whole concept of ranking is problematic, the ranking for the social sciences and humanities (SSH) is fundamentally flawed, since most SSH production is in Arabic and Arabic-language journals are not indexed by Elsevier’s Scopus.

University rankings are closely tied to the idea of the knowledge economy. The best-known ranking is the one produced by Shanghai Jiao Tong University, but it was not the first. In the United States, the National Research Council ranked doctoral schools in 1982, and US News & World Report produced its first ranking of undergraduate university programs in 1983. Business Week (1988) and the Financial Times (1989) also produced rankings of MBA programs in the United States and the United Kingdom. Nonetheless, the Shanghai ranking in 2003 was a shock to the system because of its worldwide scope and its rare mix of indicators, which included publications and Nobel prizes. The origin of this ranking is interesting in itself: it was supposed to provide a list of good (or eligible) universities for Chinese students receiving scholarships for study abroad. In other words, it was meant to guide the government in raising the academic quality of its doctoral students going abroad, who were also expected to return with a prestigious diploma.

In some countries, alternative rankings have been proposed, including in Germany (CHE ranking in 2002 and post-grad ranking in 2007), France (Ecole des Mines ranking in 2007), and the United Kingdom (Times Higher Education in 2004). The European Union has been quite attentive to the spread of university rankings and has tried to promote alternative ways of thinking that would replace the notion of ranking with the notion of “strategic positioning” proposed by indicator specialists in Europe.

The huge success of these rankings can be explained by many factors: the globalization of research and higher education as a collection of competitive markets (for universities, students, professors, and publications); the close connection that private universities establish between salaries and “prestige,” apparently as measured by rankings; the development of evaluation procedures based on indicators instead of peer assessment, procedures that promote individual success and “excellence”; and the further deregulation and privatization of higher education in countries where research and higher education were part of a world image of hegemony (the United Kingdom, for example; compare the resistance of the French university and engineering school system to rankings). The predominance of metrics that tie evaluation to simple performance indicators has also been at the very heart of New Public Management and, more generally, of managerial approaches to research and higher education policy. Evidently, those promoting ideological privatization and less state involvement in the economy will favor these rankings and metrics of excellence.

More recently, the debate has moved from criticizing rankings, which seems relatively ineffective, to promoting impact measurement. A whole new field of research evaluation is thus emerging. In the meantime, US News continues to produce its rankings regularly, a nice commercial venture since readers of magazines and newspapers find it reassuring to locate their school in those rankings. Little is known about how much this effort actually influences the choice of a school, but it certainly has a nice impact on sales. While other rankings (such as Times Higher Education) take into account both teaching and research, US News focuses on only one research output: publications indexed in Scopus.

The Arab countries, mainly in the Gulf, have actively promoted commercially based universities, either public or private, close to this worldwide market of competences, where money buys prestige and petroleum funds excellence. Rankings fit well with this search for excellence and market competition. Some universities have been denounced in newspapers and scientific journals for hiring shadow professors who spend almost no time there but agree, for a high price, to list all of their publications with that university or to publish some of their papers under its affiliation. The actual size of this phenomenon is still not known, but it probably affects the actual level of scientific research only marginally. Nonetheless, it does affect the image of the universities and the countries in the rankings produced by the Competitiveness reports and in World Bank assessments of the knowledge-based economy.

As previously mentioned, US News recently published the Best Arab Region Universities. This ranking, unlike its U.S. equivalent, is based solely on the raw numbers of articles, citations, and other indicators provided by the Scopus database, another commercial venture, run by Elsevier. This means the figures are not scaled against the number of academic staff an institution employs. Unlike Web of Science, Scopus seems to welcome additional journals, some of which are questionable, as described later. In fact, in aggregate figures for statistically large datasets, both databases seem to cover scientific production in very similar ways. When zooming in on countries that produce a small number of publications, or on particular institutions, the methodological shortcomings multiply and make interpretation even more difficult. Still, there is no accepted standard in bibliometric evaluation, and rankings are not at all favored by specialists in the field.

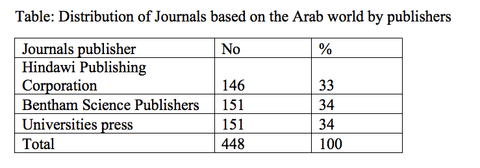

Thus university rankings should be taken for what they are: commercial activities that describe a private-sector knowledge market and try to provide tools for it. The US News ranking of Arab universities has all the flaws of rankings and, in addition, is based on a very thin database. If we look at the 448 “Arab” journals included in the Scopus list, we find that 67 percent of them belong to two problematic publishers, Hindawi (based in Cairo) and Bentham (based in Sharjah, United Arab Emirates). Both appear in Beall's list, which names potential, possible, or probable predatory scholarly open-access publishers.[1] "Predatory journals" are those that unprofessionally exploit the author-pays model of open-access publishing (Gold OA) for their own profit (which seems quite high). Typically, these publishers spam professional email lists, broadly soliciting article submissions for the clear purpose of gaining additional income. Operating essentially as vanity presses, these publishers typically have a low article acceptance threshold, with a false-front or non-existent peer review process. Unlike professional publishing operations, whether subscription-based or ethically sound open access, these predatory publishers add little value to scholarship, pay little attention to digital preservation, and operate using fly-by-night, unsustainable business models.

According to this ranking, the five “best” Arab universities are: King Saud University, King Abdulaziz University, King Abdullah University of Science & Technology, Cairo University, and the American University of Beirut. Beyond this overall ranking, the publication offers rankings in each scientific field, an approximation of academic disciplines.[2] For the social sciences and humanities (SSH), the ranking is fundamentally flawed, since most SSH production is in Arabic and Arabic-language journals are not indexed by Scopus. Only two journals in the list are produced in Arabic (one from Kuwait and another from Jordan)[3] among the seven journals based in the Arab world. There are around 300 academic journals in Arabic in the Arab world, and they are consequently ignored.

Rankings are not indicative of research, nor are they used to evaluate research, even in very competitive environments. Rankings are not used for funding decisions and have probably never had any real impact on the choice of a career, since other features such as location, cost, proximity, and previous knowledge of an academic institution play a more important role than any ranking. They serve only a symbolic, political, and highly ideological function, in that they legitimize the idea of benchmarking among different universities. If any effect is to be found, it is in triggering fierce controversies among academics and academic managers over the respective merits of their own institutions, discussions that never go beyond the frontiers of the small world concerned by the figures. Prospective students and families who read these rankings will probably be happy or disconcerted about their choices (future or present) but will in the end give little credit to figures that relate only loosely to actual academic status and practice.

As Bourdieu once wrote, “standardization benefits the dominant,” and these rankings seek to consolidate the idea of a one-size-fits-all standard, a measure that fits all, independently of content, orientation, location, or resources. Instead of being thought of as a social institution that fits a certain environment, in terms of ecology (bio-diversity adapted to its environment), the university is thought of in terms of hierarchy (how to attain the title of “the best” when competing against Harvard University and its 41-billion-dollar endowment). Limited to this elite formation function, the university becomes a caricature of itself. Effects in the country or the territory, activities beyond publishing, research, community services, participation in public debates, influence on policy decisions, contribution to local political life, dissemination of both knowledge and arts, and social organization become invisible in these one-dimensional rankings. Even the actual contribution of individuals highly devoted and loyal to their own home institution becomes a footnote in the career of academic faculty members. More worrying still is the fact that promotion reports, which are produced for promotion inside universities and decide the professional life or death of candidates, are contaminated by the benchmarking and managerial view of “excellence,” which obscures all other dimensions that are not part of the ranking in terms of publications. Ranking is thus part of an academic “celebrity model” that operates at a global level, in a selective way, like globalization itself.

While we are not enthusiastic about any ranking, if a ranking is a must, we can think about alternative ways of conducting rankings or setting promotion criteria for individual professors. Some principles to be taken into account:

- All indicators should be scaled against the number of academic staff a university employs.

- Bibliometrics may inform peer review but should not replace it.

- Creation of a national/language portal (such as The Flemish Academic Bibliographic Database for SSH). The newly established E-marefa and Manhal are a starting point for the Arab world, but they are still insufficient, and it would be better for a national or an official pan-Arab organization to create such a portal.

- Benchmark the whole life cycle of research (i.e. including knowledge transfer and public/policy research activities). We admit that not all research should have an immediate relevance to local society. Thus research should be classified by temporality (research that needs time to produce output [because of long fieldwork or because of the political sensitivity of its content] versus research that yields quick results) and by public/policy relevance and knowledge transfer/innovation (looking at how much research income an institution earns from industry). If the trend toward quantification continues, indicators of public/policy activities should be developed for the relevant research, including whether these activities lead to relevant public and policy debates.
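The first principle above, scaling indicators against academic staff, can be illustrated with a minimal sketch. The institutions, publication counts, and staff numbers below are entirely hypothetical and are not drawn from Scopus or from the US News ranking; the point is only that ordering by raw counts and ordering by output per staff member can disagree.

```python
from dataclasses import dataclass


@dataclass
class Institution:
    name: str
    publications: int    # raw count of indexed articles (hypothetical)
    academic_staff: int  # number of academic staff (hypothetical)


def per_staff_output(inst: Institution) -> float:
    """Publications per academic staff member."""
    if inst.academic_staff <= 0:
        raise ValueError("academic_staff must be positive")
    return inst.publications / inst.academic_staff


def rank(institutions, key):
    """Return institution names ordered from highest to lowest key value."""
    return [i.name for i in sorted(institutions, key=key, reverse=True)]


# Hypothetical figures, chosen only to show how the two orderings diverge.
SAMPLE = [
    Institution("University A", publications=3000, academic_staff=4000),
    Institution("University B", publications=1200, academic_staff=600),
    Institution("University C", publications=900, academic_staff=1500),
]

raw_ranking = rank(SAMPLE, key=lambda i: i.publications)
scaled_ranking = rank(SAMPLE, key=per_staff_output)

print("By raw count:     ", raw_ranking)
print("By output / staff:", scaled_ranking)
```

In this made-up sample, the largest institution leads on raw counts, while a much smaller one leads once output is divided by staff size. A single ratio is of course still a one-dimensional measure and is subject to the other caveats listed above.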

Arvanitis is a research director at the Institut de recherche pour le développement (France) and Hanafi is a professor at the American University of Beirut.

[1] http://scholarlyoa.com/publishers/

[2] Disciplines in academia do not necessarily follow the classification by fields used in databases. Moreover, indicators are very sensitive to changes in the number of articles indexed by the database. Finally, the production of a specific university can be strongly underestimated because of incorrect affiliations and wrong or variant ways of writing a given affiliation. Databases like Scopus and Web of Science have tried to track the names under which authors' affiliations appear, but large parts of the production can still be missed.

[3] A few other journals in fact publish in both English and Arabic, such as the Arab Gulf Journal for Scientific Research.
